VIRTUAL PRODUCTION & MOTION CONTROL

CASE STUDY

THE RISE OF MOTION CONTROL IN VIRTUAL PRODUCTION — WITH RITE MEDIA

Does creativity drive technology, or does technology drive creativity? In film and TV production it can often be both. However, the ability to imagine and build increasingly complex and realistic virtual environments often results in actors, directors and camera operators working within vast, empty, green-walled spaces relying on their imaginations to bring a performance to life. But that could all be about to change.

GOODBYE TO GREENSCREENS

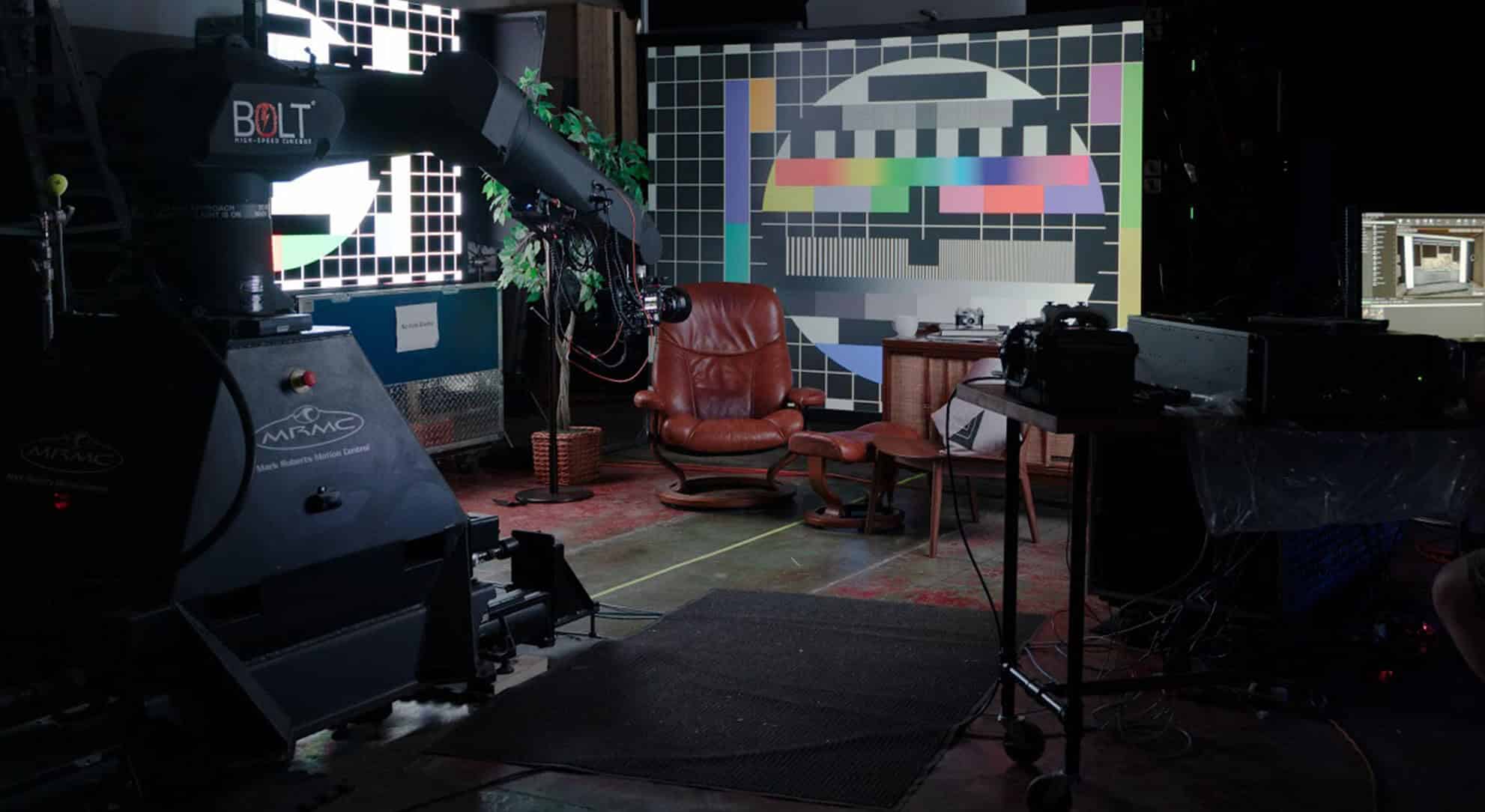

On the sets of the future, green screens will be replaced by LED screens displaying high-resolution dynamic backgrounds. The screens will produce realistic lighting effects, bringing any location, real or imagined, onto the set and enabling the same ‘natural’ lighting conditions to be maintained for as long as needed, or accurately reproduced weeks later. The generation of authentic colours will immerse the actors and props within their environment, and many of the lighting problems associated with green screens, including the need to correct any ‘spill’ in post, will be removed.

IF WE GET A CAMERA MOVE THAT WE’RE REALLY HAPPY WITH BUT DECIDE THAT WE WANTED TO TWEAK SOMETHING, WE CAN DO IT AGAIN BUT NOT WORRY ABOUT A JIB ARM, STEADICAM OR ANYTHING LIKE THAT

Post Production Supervisor and Solutions Architect, RiTE Media

REAL-TIME FEEDBACK

Integrating the real-time visual effects generated by Unreal with motion control Cinebot™ rigs like Bolt will offer new dimensions of creative control on set, allowing the full potential of Virtual Production to be realised. Motion control makes accurate multiple passes simple, so set or lighting changes can be made easily and additional VFX can be added in post.

A key component of this integration is RiTE Media’s LiveLink plugin. For this project, LiveLink took the data from the Bolt and automatically converted it to Unreal’s coordinate space in real time. The plugin minimises any potential rendering lag, essentially tethering the virtual camera in Unreal to the robotic camera on set, in this case Bolt.
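As a rough illustration of the kind of conversion described above, the sketch below maps a camera position from a rig coordinate frame into Unreal's world space. Unreal uses a left-handed, Z-up frame measured in centimetres. The rig frame shown here (right-handed, Z-up, metres) and the names `RigPosition`, `UnrealLocation` and `rig_to_unreal` are assumptions made purely for illustration; they are not the conventions or API of Bolt, Flair or RiTE Media's plugin. Rotation, lens and timing data, which a real integration would also have to handle, are left out.

```python
from dataclasses import dataclass

METRES_TO_CM = 100.0  # Unreal world units are centimetres


@dataclass(frozen=True)
class RigPosition:
    """Hypothetical rig-side camera position.

    Assumed frame (not confirmed by the source): right-handed, Z-up,
    X forward, Y left, measured in metres.
    """
    x: float
    y: float
    z: float


@dataclass(frozen=True)
class UnrealLocation:
    """Camera location in Unreal's frame: left-handed, Z-up,
    X forward, Y right, measured in centimetres."""
    x: float
    y: float
    z: float


def rig_to_unreal(p: RigPosition) -> UnrealLocation:
    """Convert the assumed rig frame to Unreal's frame.

    Negating Y switches from right-handed to left-handed while keeping
    X forward and Z up; the scale factor converts metres to centimetres.
    """
    return UnrealLocation(
        x=p.x * METRES_TO_CM,
        y=-p.y * METRES_TO_CM,
        z=p.z * METRES_TO_CM,
    )


if __name__ == "__main__":
    # A camera 1 m forward, 0.5 m to the left and 2 m up in the rig frame.
    print(rig_to_unreal(RigPosition(1.0, 0.5, 2.0)))
```

In a live setup, a conversion of this kind would run on every sample streamed from the rig, so keeping it to a sign flip and a scale helps avoid adding latency of its own.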

WE CAN KEEP MAGIC HOUR GOING ON FOR THREE DAYS IF WE NEED TO!

Post Production Supervisor and Solutions Architect, RiTE Media

POWER OF MOTION CONTROL

The pre-visualisation power of motion control also has a big part to play in Virtual Production. MRMC’s Flair motion control software, connected directly to Unreal via LiveLink, can be used to generate real-time feedback for multi-axis camera moves and focus points while the sets and locations are still being designed. This presents the opportunity for long, complex movements across highly detailed backgrounds to be pre-rendered, further minimising any potential render lag on set. Virtual Production is future-proofing filmmaking, and motion control robots, such as Bolt, are central to making it happen.
